Product Line Engineering and Model Based Systems Engineering – Overview

This article is part of a monthly series entitled “Product Line Engineering and Model-Based Engineering”.

This article concerns document generation from MBSE models. We explain why and how to use the document generation capability provided by modelling tools to get the most out of your Systems Engineering models.

We start by explaining why generating documents from your models is useful. This can seem obvious, but it is not. Next, we present the generic mechanisms behind document generation, regardless of the language, method and tool used for your model. Then we suggest a three-step method for generating a document. Finally, to make things concrete, we illustrate document generation with the same system modelled in two different languages and tools, to show that we can reach the same results independently of the tool used. For this practical demonstration we have chosen two combinations of languages and tools widely known and used by the MBSE community: SysML with Cameo Systems Modeler, and Arcadia with Capella.

This will also demonstrate that the document generation can be done almost independently of the method chosen to model your system.

First, let us imagine a world where everyone would be trained to read and write models just as we learn to speak natural languages. We could assume that, at some point in time, models would replace most of the documents exchanged to define, build, verify and validate a complex system.

However, in the current industrial reality, this situation is not likely to occur for decades, if at all. At best, within the system definition team, we can assume that team members work with models and consider models as engineering artefacts, from which they are able to derive functions, requirements, components, interfaces, parameters, etc., and from which the team (the same one or a different team) can perform verification and validation. But systems engineering is far more than the isolated work of one system definition team. There are a lot of exchanges with many other teams, including:

These other teams do not always understand the modelling language used by the system definition team, and they do not always need access to the whole model. Hence, it is key to be able to extract a consistent set of model information and share it in a more commonly known language. This is where the document comes back on the scene.

Note: Here we use the term “document” in an inclusive manner to refer to different types of static media, including text, tables, drawings, etc.

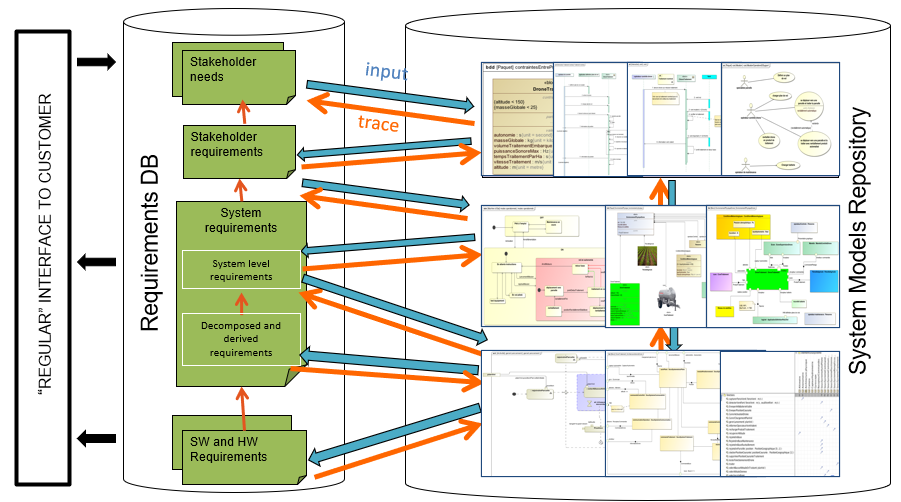

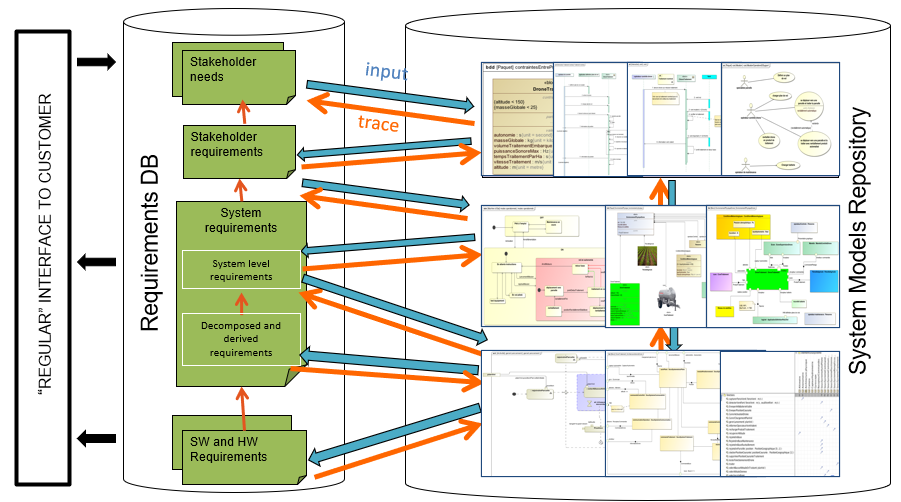

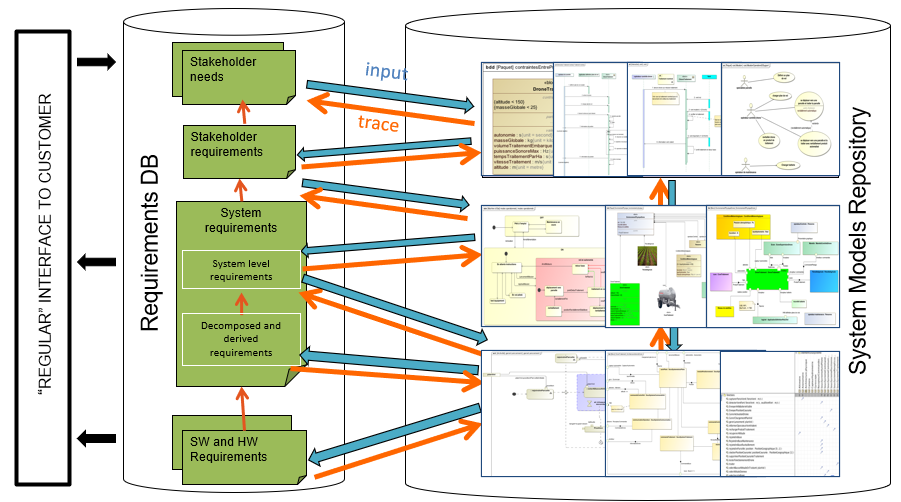

For many years to come, systems engineers will need to export parts of their model in document formats so that people who are not trained, or not trained enough, in the system model notation (SysML, Arcadia or any other formalism) can understand and analyze the information contained in the model. The goal should be for the model to remain the central repository, the source of truth for all the actors of the project, with exported views adapted to the unique needs of each actor or team involved with the project.

Once we are aware that we need to share documents with other teams (and sometimes within the same team), the central question becomes:

“Shall we build those documents manually or generate them from our model?”

This may sound like a silly question, because it seems obviously useful and time-saving to generate the documents. But document generation takes a long time to set up, and it requires a lot of effort to get familiar with the document generation approach and the associated tools. For a project with strict deadlines and a limited budget, investing in document generation is not an obvious solution.

In the short term, if the project has short and strict deadlines for the first deliveries, and if the question of generating documents has not been anticipated, the answer is quite simple: “Do not try to generate your documents from your model”. Creating a document (architecture, interface, specification, verification plan, …) will always be faster by hand the first time. You start from a template or from an existing document that stems from another project, then you copy and paste some diagrams, you extract some requirements from your requirements database, and you complete it with some explanations and drawings. It takes time to arrange the information, time to review the document and to check the completeness and global consistency, but you know you will succeed in writing this document because you know what information to include and where to find it. If you have only just discovered document generation, you have no idea how much time you will need to set up everything required for the document generation to work, so it adds a lot of stress if you are short on time.

After a few iterations, as your system definition evolves, you will probably need to update your document (architecture, interfaces, …). You may realize that it becomes tedious to identify the parts of the document that must be updated and to perform those modifications, especially if those modifications have already been performed in the system definition model. In this case, you have two repositories that share the same information: the model and the document. So now you spend time reflecting every change in both places, with most of the effort spent laying out the information, and with a risk of inconsistencies between the two repositories. This time is not spent on engineering, but on synchronization.

After a few iterations it becomes very clear to everyone on the team that this double update is expensive and does not bring any value. This is where there is a high risk: giving priority to the document…

and lose control of the model.

At Samares Engineering we have a lot of experience in using models. We know that when the project is operational, when there are strict deadlines to conform to, the pressure on the system team regularly rises to deliver some documents because those documents are contractual. Priority is given to their delivery and when time is in short supply, some changes are reflected directly and only in the documents. The synchronization between the model and the documents is not maintained. The consequence is simple: the model becomes progressively obsolete and loses most of its value because it does not reflect the current problem or solution space. The reference is now the document and no longer the model. This is the end of the value of the model. A good example of failure in MBSE. And there will be people who ask you why you spent so much time on building a model…

When looking at the long term, generating documents from the model is the only option.

You know that you cannot afford to maintain the same information in two repositories. If you really want to use models to support systems engineering, then your documents must be derived from the model, which requires some automation: generate document(s) from the model. Now you know and can prepare your project team 😉

From our experience, the main kinds of documents generated from a system model concern the system architecture: list of functions, functional breakdown, functional architecture, components, Product Breakdown Structure, interfaces, functional behavior, automatons.

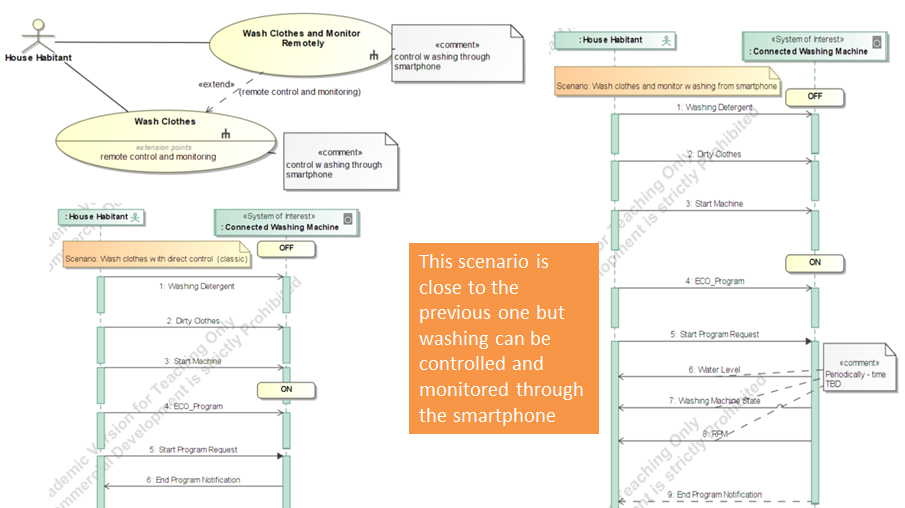

We also often find the description of needs and expected functionalities: use cases, context diagram, external interfaces, scenarios, some state machines, and the generation of Interface Control Documents, either as text (Word documents) or as Excel sheets.

Note: we can also use the document generation capabilities to extract views not defined in the model, as long as the information is included in the model. For instance, we can build tables that relate use cases and requirements with test procedures described as sequences or state machines to give metrics to the project manager on the traceability.
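As an illustration of such a derived view, here is a minimal sketch in Python. It assumes the traceability links have already been extracted from the model into plain dictionaries; all element names (REQ-1, UC-Spray field, TEST-Spray nominal) are hypothetical and do not come from the models presented in this article.

```python
# Hypothetical traceability data; in a real project these links would be
# read from the model through the generation tool's query language.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
use_case_links = {"UC-Spray field": ["REQ-1", "REQ-2"]}  # use case -> requirements
test_links = {"TEST-Spray nominal": ["REQ-1"]}           # test procedure -> requirements


def traced_by(links, requirement):
    """Return the sorted names of the elements linked to the requirement."""
    return sorted(name for name, reqs in links.items() if requirement in reqs)


def traceability_rows(requirements, use_case_links, test_links):
    """Build one table row per requirement: (requirement, use cases, tests)."""
    return [
        (req, traced_by(use_case_links, req), traced_by(test_links, req))
        for req in requirements
    ]


def coverage(rows, column):
    """Fraction of requirements with at least one link in the given column."""
    return sum(1 for row in rows if row[column]) / len(rows)


rows = traceability_rows(requirements, use_case_links, test_links)
for req, use_cases, tests in rows:
    print(f"{req} | {', '.join(use_cases) or '-'} | {', '.join(tests) or '-'}")
print(f"Requirements covered by a test: {coverage(rows, 2):.0%}")
```

The table itself is not stored anywhere in the model; it is computed at generation time from links that are.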

Some tools, like Cameo Systems Modeler, have the capability to compare two versions of a model and to generate the differences between them in a document. In this way, document generation can be a tool for analyzing the evolution of the models between 2 versions.
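The idea of a version comparison can be sketched in a few lines of Python. This is not how Cameo Systems Modeler implements its comparison; it is only a simplified illustration that assumes each model version has been flattened into a dictionary of element identifiers and properties (the identifiers and names below are hypothetical).

```python
def diff_models(old, new):
    """Compare two model snapshots given as {element id: {property: value}}."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    changed = sorted(eid for eid in set(old) & set(new) if old[eid] != new[eid])
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical snapshots of two versions of the same model.
v1 = {"F1": {"name": "Spray product"}, "F2": {"name": "Compute trajectory"}}
v2 = {"F1": {"name": "Spray treatment product"}, "F3": {"name": "Detect obstacles"}}

report = diff_models(v1, v2)
for kind, ids in report.items():
    print(f"{kind}: {', '.join(ids) or 'none'}")
```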

In short, any information stored anywhere in the model can be put into the generated document. The customization of templates makes it possible to adapt the generated document to the specific needs of your company, project or team.

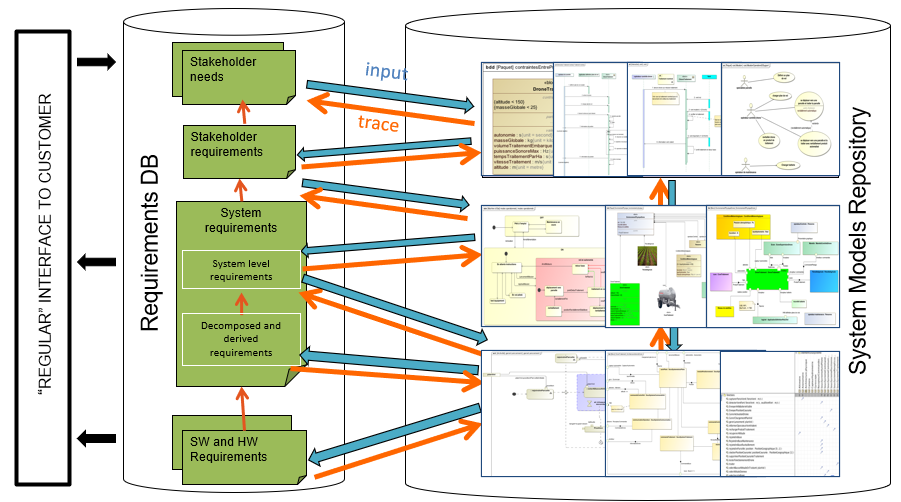

The ability to generate documents is not native to any modeling language, but to the tool used for the modeling. Typically, a template is written, containing queries that specify where in the model some specific information can be found, and how this information should be presented in the document. This template in combination with a model is then processed by a document generation tool, in order to produce the generated document. As mentioned in the introduction, we will focus on the modelling tools Cameo Systems Modeler and Capella, which each use a different document generation tool.

The principle, as illustrated in the figure above, is the same no matter the tool used. The template and the model must be constructed respecting the same metamodel and the same modeling rules. This is the only way to ensure that the correct information can be found in the correct place in the generated document. For languages like Arcadia/Capella, which have fewer customization options, the same template can possibly be used across several different projects, with minimal adaptations. For languages like SysML, which are extensible by nature, and where different extensions (profiles) may exist within the same company in order to address different specific cases, the templates may require more rework in order to be reused. However, if this is known in advance, it is possible to make the template compatible with all the different extensions (profiles) from the get-go. This will require more effort in the beginning, but less maintenance than having several templates to maintain.

All this depends on the specific needs of your company and the different teams within your company.
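The generic mechanism described above (a template containing queries, processed together with a model) can be sketched in Python as follows. This is a toy illustration only: the `{{list:<Type>}}` query syntax, the dictionary-based model and the element names are assumptions made for this sketch and correspond to neither Report Wizard nor M2Doc.

```python
import re

# Toy model: a list of typed elements (the "metamodel" is just the type names).
model = [
    {"type": "Function", "name": "Spray product"},
    {"type": "Function", "name": "Compute trajectory"},
    {"type": "Component", "name": "Navigation"},
]

# Toy template: static text plus queries written as {{list:<Type>}}.
template = (
    "Functions: {{list:Function}}\n"
    "Components: {{list:Component}}\n"
    "Actors: {{list:Actor}}"
)


def run_query(model, type_name):
    """Return the names of all model elements of the requested type."""
    return [e["name"] for e in model if e["type"] == type_name]


def generate(template, model):
    """Replace every query in the template with its result on the model."""
    def substitute(match):
        names = run_query(model, match.group(1))
        return ", ".join(names) if names else "(none found)"
    return re.sub(r"\{\{list:(\w+)\}\}", substitute, template)


document = generate(template, model)
print(document)
```

The `Actor` query returns nothing because the toy model contains no element of that type. A template written for a different metamodel or profile than the one used by the model would show the same symptom: queries that silently find nothing.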

In Cameo Systems Modeler, the document generation tool is called Report Wizard. It is a technology developed specifically for Cameo Systems Modeler and comes natively with this tool. Report Wizard is based on Velocity, a Java-based template engine. It requires templates to be written in Velocity Template Language (VTL) (Velocity User Guide).

To generate a document from a model using the Report Wizard, it is enough to open the Report Wizard from the model that the document should be generated from and select the desired template. Just like any other wizard, it guides the user through the steps of the generation. Cameo Systems Modeler comes with a fairly wide selection of templates, though custom templates will be necessary to obtain a document that is truly useful. However, these existing templates do give good starting points for developing custom templates.

The Velocity Template Language is written in plain text directly in the template document, where the formatting applied to the template queries (code) reflects the formatting in the finalized generation.

In VTL, each variable is prefaced with “$”, and each command line to be executed starts with “#”. Any lines of text not prefaced with “#” will be reproduced in the generated document, though any variables (starting with “$”) will be replaced with their value.

In addition to the basic queries and operations native to VTL, some helper modules and special variables have been developed specifically for use with Cameo Systems Modeler, that make some of the information in the model much easier to access. For instance, the variable $elements is a list of every single element that exists in the scope of the model selected for generation, and the helper module $report makes it possible to obtain a filtered list of elements.

For example, if your model looks like this:

Using this template:

Will give this result:

VTL can be used in many different formats, including Word, Excel, PowerPoint and html. In this article, we only focus on the generation of Word documents.
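To make the VTL conventions described above more tangible (variables prefixed with “$”, directive lines starting with “#”, all other text reproduced as-is), here is a minimal Python sketch that mimics them. It is not Velocity and not Report Wizard: it only supports a single, non-nested `#foreach` loop, and the list of dictionaries standing in for `$elements` is an assumption made for this illustration.

```python
import re


def substitute(line, scope):
    """Replace $name and $name.attribute references with their values."""
    def value(match):
        obj = scope[match.group(1)]
        return str(obj[match.group(2)] if match.group(2) else obj)
    return re.sub(r"\$(\w+)(?:\.(\w+))?", value, line)


def render(template, context):
    """Render a tiny VTL-like template (one level of #foreach ... #end)."""
    output, loop_var, loop_items, loop_body = [], None, None, []
    for line in template.splitlines():
        stripped = line.strip()
        match = re.fullmatch(r"#foreach\(\s*\$(\w+) in \$(\w+)\s*\)", stripped)
        if match:
            # Directive line: start collecting the loop body.
            loop_var, loop_items, loop_body = match.group(1), context[match.group(2)], []
        elif stripped == "#end":
            # Directive line: emit the loop body once per item.
            for item in loop_items:
                scope = {**context, loop_var: item}
                output.extend(substitute(body_line, scope) for body_line in loop_body)
            loop_var = None
        elif loop_var is not None:
            loop_body.append(line)
        else:
            # Plain text line: reproduced with its variables replaced.
            output.append(substitute(line, context))
    return "\n".join(output)


template = "Functions of $system\n#foreach($e in $elements)\n- $e.name\n#end"
context = {
    "system": "AgriUAV",
    "elements": [{"name": "Spray product"}, {"name": "Compute trajectory"}],
}
result = render(template, context)
print(result)
```

The two directive lines do not appear in the output; only the plain text lines do, with their variables replaced.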

Capella does not have a natively integrated document generation tool. However, since M2Doc (M2Doc reference documentation version 3.1.1) is a technology developed to work with any Eclipse Modeling Framework (EMF)-based model and Capella is based on EMF, the two are compatible. The M2Doc plugin must be installed separately, as it is not delivered with the Capella installation. M2Doc requires a Generation Configuration file (.genconf) to serve as the “glue” between the template file and the model. Wizards exist to guide the user in creating the Generation Configuration file and the template file. Only one template example for use with Capella is available, developed by Obeo based on the In-Flight Entertainment system (IFE) example. This template is not likely to be useful for a company as-is, but it provides a good starting point for developing a custom template.

The template language used with M2Doc is the Acceleo Query Language (AQL) (AQL documentation), which in turn is based on the Object Constraint Language (OCL) (Object Constraint Language Specification Version 2.4 – omg.org). There are no Capella-specific helper modules or variables, since M2Doc and AQL are generic to all EMF-based models, but Capella has an Interpreter view that makes it possible to see the result of any AQL query immediately, which is very helpful when writing custom templates.

In AQL, the variable name “self” is used to refer to the current object. When using the Interpreter view, “self” refers to the currently selected element, and it changes dynamically as you click on different elements. When writing the template, this works differently. You need to declare a variable in the template properties (it is possible to declare several) that will serve as the starting point for exploring the model. It is generally recommended to name this variable “self” and to set it to the System Engineering element. This element is the top-level root element, just below the .aird, and it is obligatory in all Capella models.
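The role of this starting variable can be sketched in Python: a query starts from one root element and explores everything contained below it, optionally keeping only the elements of a given kind. The `Element` class, the `all_contents` function and the element names are assumptions made for this illustration; they are only comparable in spirit to a containment query in AQL and do not reproduce the Capella metamodel.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    name: str
    kind: str
    children: list = field(default_factory=list)


def all_contents(root, kind=None):
    """Yield every element contained below root (depth-first), optionally filtered by kind."""
    for child in root.children:
        if kind is None or child.kind == kind:
            yield child
        yield from all_contents(child, kind)


# Hypothetical containment tree with the System Engineering element at the top.
system_engineering = Element("AgriUAV Project", "SystemEngineering", [
    Element("Logical Architecture", "LogicalArchitecture", [
        Element("Root Logical Function", "LogicalFunction", [
            Element("Compute trajectory", "LogicalFunction"),
            Element("Spray product", "LogicalFunction"),
        ]),
        Element("Navigation", "LogicalComponent"),
    ]),
])

# In the template, the declared variable plays the role of this starting point.
start = system_engineering
names = [f.name for f in all_contents(start, "LogicalFunction")]
print(names)
```

Because the exploration starts at the top-level element, functions are found at any depth of the tree, which is why setting the starting variable to the System Engineering element is convenient.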

M2Doc is currently only developed for Word documents. The AQL queries are included in code fields within the Word template, and the formatting applied to the queries reflects the formatting of the result. Each code field starts with “m:” to signal that this code field should be interpreted by M2Doc. Standard Word code fields, such as the ones used to calculate the page number or the figure number, are still compatible and should be used just like in any regular Word document.

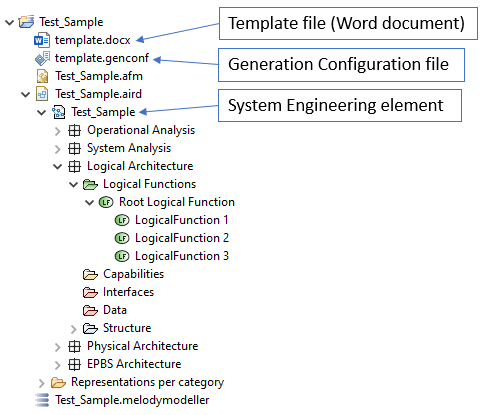

As an example, if your Capella model looks like this:

Example of a Capella model, where the template (Word) file, the Generation Configuration file and the System Engineering element are indicated.

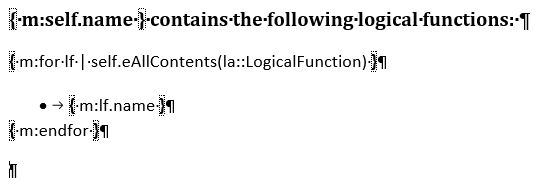

And your template (Word) document looks like this:

Example of M2Doc code. The variable “self” indicates the System Engineering element in the model. This code collects and lists all the Logical Functions that exist anywhere below the System Engineering element.

Then the result of the generation will look like this:

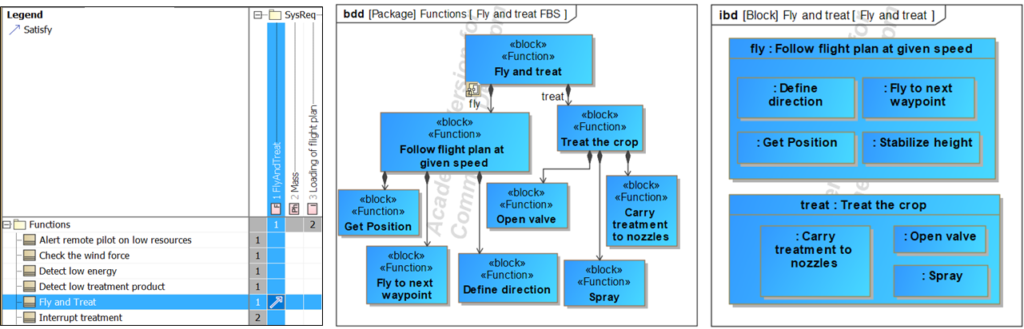

To show off the capabilities of both modelling tools, we present here the same system modelled in a similar manner using both Cameo Systems Modeler and Capella. This system is an Unmanned Aerial Vehicle (UAV), also called a drone, dedicated to treating fields against pests or diseases. For this article, we focus on the logical architecture, with functions and requirements allocated to the logical components. We chose to focus on this layer to show how to use the model to generate a preliminary specification for one or several components of your system. This specification can, once it has been completed, be provided to a sub-contractor, or to a team internal to the company, responsible for buying or making this component.

Note that we are talking about a preliminary specification. Most companies that write specifications have some sort of template to serve as a support for writing new specifications, and the exact contents of the specification will vary from company to company. However, it is common for there to be an introductory part with information about the project context for the specification, to help the reader better understand the component being specified. While some parts of this context can be included in the model (name of the global system, other components or external actors that interface with the specified component, etc.) other information such as contact information and referenced documents are rarely included in the model. While it is technically possible to include ALL the information in the model, this can make the model hard to maintain, and the system model is not necessarily adapted for this kind of information. We recommend using classic tools for all information that does not naturally belong in the system model, and completing the partially generated document by hand after it has been generated.

We will generate a preliminary specification document from each of our models in order to illustrate one of the uses of document generation.

In the next paragraphs we present a method to set up the document generation.

Note: This method is illustrated with the specification document generation, but the method applies to any kind of document to generate.

The first step in the document generation setup process is figuring out what we want to display in the output document.

In our case (the system specification), most of the time the system team knows what information to include because specification documents are used in most projects and there is often a Word template defined by the company to guide systems engineers to ensure that all the key information will be filled (or marked as not applicable). This step then consists in taking the template document and using it to describe precisely what we want to display in each chapter and with which layout: list, table, image… It is a good idea to use examples of information from previous projects (modified if confidentiality applies) so that we can have a good idea of the contents and the layout of the target output document.

For documents that are less common or project-specific, where there is no pre-defined template to base the generation template on, this step will consist in identifying all the information that we want to see in the generated document and to determine the layout of this information. Examples remain useful to ensure everyone understands the same thing and agrees on the final result.

We can separate the contents of the generated document into two categories: static and dynamic. Static content remains the same no matter the model it is generated from, and is included in the generation template in the same way it would be included in a regular template. This content typically includes the cover page with the company information and logo, as well as (some) titles, a table for the revision history, etc. Dynamic content is content that changes based on the model it is generated from. In other words, it is any and all information obtained from the model.

In this second step, we focus on the source of the dynamic information to display in the document. We assume that the static information is already put in place in the template document based on the information obtained in step 1.

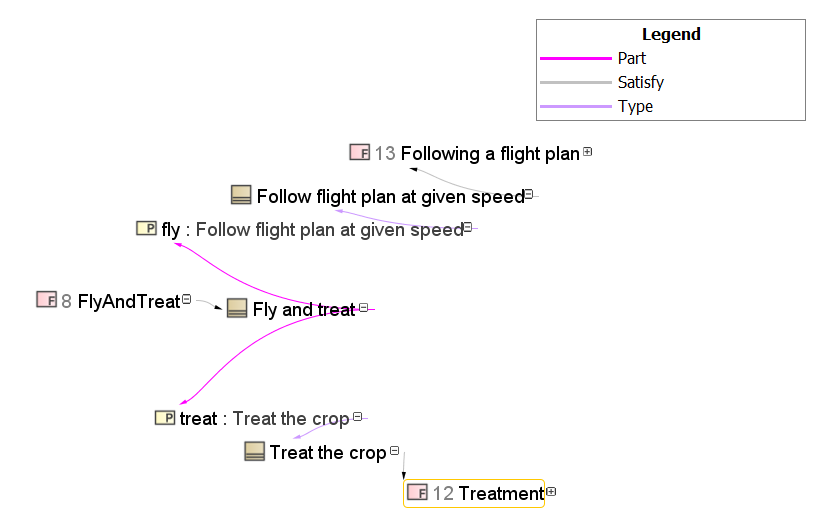

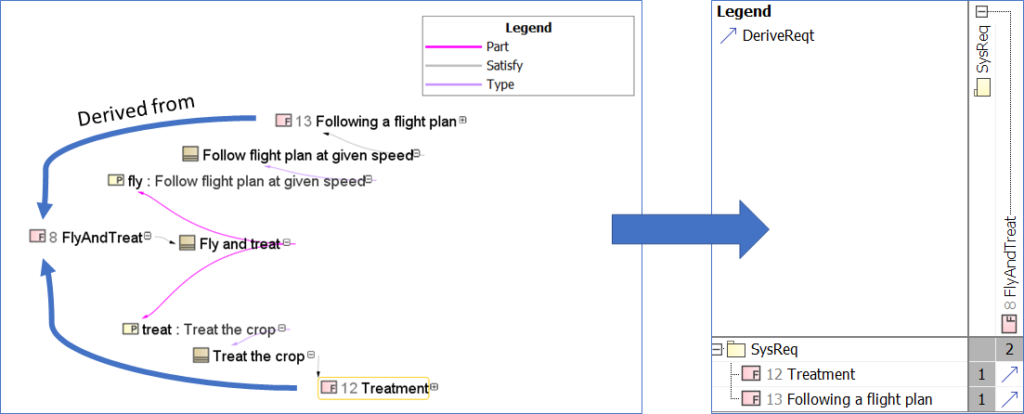

For all the different dynamic information (use cases, scenarios, context, functions, requirements, interfaces, components, traceability links, …), we first have to identify whether this information is present in the model. Sometimes we face a case where part of the dynamic information we want to include is not present in the system model. For instance, in the specification document we might want to display the traceability to the customer requirements, but our system model does not contain the customer requirements. These are instead stored in a dedicated requirements database. In this example, we realize that the dynamic information we want (the traceability links to customer requirements) is not available in the system model. In this case, there are two solutions: either we complete our model to contain ALL the dynamic information required for the specification document generation, or we build our document from several sources (example: one model + one requirements database). This last option generally means generating a partial system specification and completing it manually after generation with the information that is missing.

In this first article about document generation, we want to keep things simple. For the rest of this article, we assume that all the required dynamic information is available in our system model.

Once you have confirmed that all the dynamic information is present in the system model, you have to identify how to access this information from the model.

Let us see an example:

We see here that we need formal rules to identify the information in the model. If customer requirements exist at different places in the model and cannot be easily identified (by an attribute, a tag or a finite list of locations) the document generation cannot be automated.

The mapping of dynamic information to model data requires formal rules (also called “queries”) to identify the model data. If the rules are unclear or ambiguous, the document generation is likely to retrieve the wrong data or miss some of the data that should be included.

How much credit can you give your document if you cannot trust the document generation and need to verify that the output document really contains what was expected?
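The difference between a clear rule and an ambiguous one can be sketched in Python. The model below is a hypothetical flat list of elements, and the tag “customer” and the package names are assumptions made for this illustration.

```python
# Hypothetical model content: requirements spread over several packages.
model = [
    {"id": "R1", "type": "Requirement", "package": "Customer Needs", "tags": {"customer"}},
    {"id": "R2", "type": "Requirement", "package": "System Requirements", "tags": set()},
    {"id": "R3", "type": "Requirement", "package": "Drafts", "tags": {"customer"}},
    {"id": "F1", "type": "Function", "package": "Logical Architecture", "tags": set()},
]


def by_tag(element):
    """Formal rule: a customer requirement is a requirement tagged 'customer'."""
    return element["type"] == "Requirement" and "customer" in element["tags"]


def by_location(element):
    """Weaker rule: assumes customer requirements only live in one package."""
    return element["type"] == "Requirement" and element["package"] == "Customer Needs"


def query(model, rule):
    """Return the identifiers of the elements selected by the rule."""
    return [e["id"] for e in model if rule(e)]


print(query(model, by_tag))       # R1 and R3
print(query(model, by_location))  # R1 only: R3 is silently missed
```

The location-based rule only works if the modeling rules guarantee that every customer requirement is stored in that package. Without such a guarantee, the generated document looks complete but is not.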

In this step we write out the formal rules (queries) used to obtain the dynamic information from the model. The language used for these queries depends on the document generation technology used; in our case it is VTL when generating documents from Cameo Systems Modeler, and AQL when using M2Doc to generate documents from Capella.

There are different strategies that can be applied when writing queries for the template, independently of the language used. One strategy is to write the different queries as independently as possible from each other. This strategy has the advantage of allowing the work to be distributed between different people and teams, and also makes reuse of parts of the templates easier. However, depending on the structure of your document and how the different dynamic information correlates, it is not always possible in practice.

The formatting of the final output document is also done in this step. This is because, for most template languages including both languages presented in this article, the formatting is applied directly to the query. If you want to present the information obtained from a query in a table, then you put that query in a table. If you want the result to be in bold and / or in italics, then you apply bold and / or italic formatting to the query. Since the formatting (layout) and the queries are so linked, it makes no sense to separate the two.

Sometimes the company template document addresses the presentation and provides guidelines for how the different information should be laid out. However, these rules often change when using a model to generate the document, as the model comes with diagrams that may replace some textual information.

This step can take a lot of time if it is not prepared in advance, especially if the readers need to see a first version of the generated document to get an idea of the output and then change their minds on the presentation. This is a bad practice, as it leads to a lot of iterations in the document generation and makes the process very sequential and time-consuming.

A better practice is to discuss the presentation in step 1 with dummy data, so that this “presentation” of data can be completely specified in step 1.

Both Cameo Systems Modeler and Capella (+ M2Doc) have similar document generation capabilities when it comes to generating Word documents. They are both able to extract diagrams and any information from the model and format it as paragraphs, lists, titles or tables, with any style.

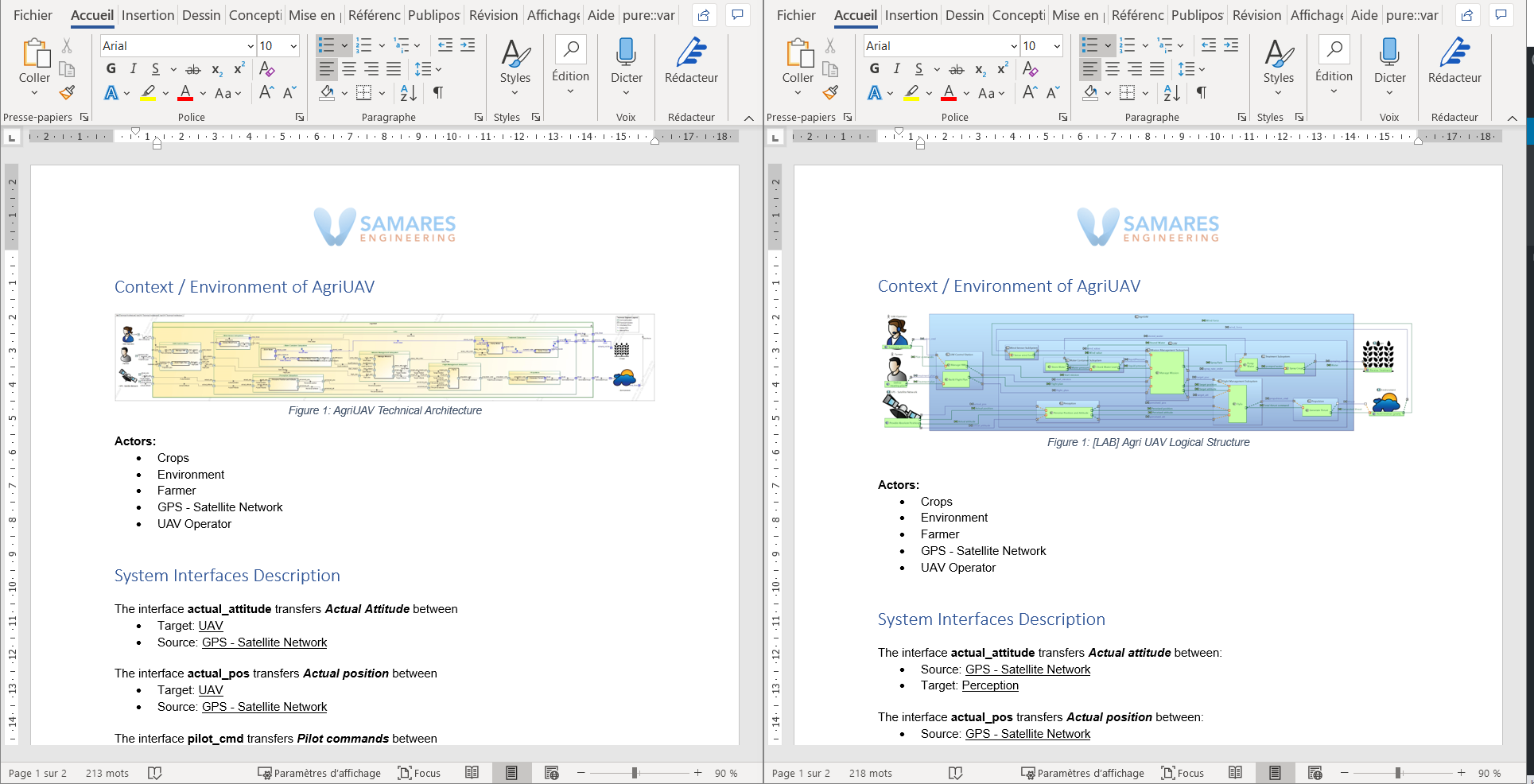

We created one template for each tool, containing the same dynamic information presented in the same way with the same formatting.

View in Word of the two generated documents side by side. On the left is the document generated from Cameo Systems Modeler, and on the right is the document generated from Capella.

In the following chapters we focus on some extracts of each of the two documents generated from the two different tools. There are some minor differences between the two documents, as we will see.

As an introductory chapter, we extracted the diagram shown above and created a list of the external actors. Except for the fact that the title of the diagram, and of course the diagram itself, is slightly different, this chapter is identical for both generated documents.

In this chapter we list the external interfaces of the AgriUAV (interfaces that cross the border of the UAV), with their source, target, and the flow that they transport.

Note: This is just an example and is extremely simplified. Generally, a specification would also include information about the flow type, specific interface constraints, etc. For this article, we have favoured simplicity for presentation and readability purposes.

At first glance this chapter is identical, but there are some minor differences between the two due to the differences in the two modelling languages used. In SysML, the connector is direction-less; it cannot be used to determine the direction of the flow, so we have to look at the direction of the ports to figure it out. For this reason, it is easier to retrieve the two ends in no particular order and then classify them (we obtain both ports and list them; an “out” port is a source and an “in” port is a target). In Capella, the component exchanges have a source and a target directly available in the properties, making it easier to obtain this information in a fixed order.
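The two ways of obtaining the source and the target can be sketched in Python. The dictionaries below are hypothetical stand-ins for a SysML connector and a Capella component exchange; they are not the data structures exposed by either tool, and the “Positioning System” actor is invented for this illustration.

```python
def sysml_source_target(connector):
    """Derive (source, target) from the port directions, since the connector has no direction."""
    ends = connector["ends"]  # the two ports, in no particular order
    source = next(port for port in ends if port["direction"] == "out")
    target = next(port for port in ends if port["direction"] == "in")
    return source["owner"], target["owner"]


def capella_source_target(exchange):
    """Read (source, target) directly from the properties of the exchange."""
    return exchange["source"], exchange["target"]


# Hypothetical SysML-like connector: the "in" port happens to be listed first.
connector = {
    "name": "actual_pos",
    "ends": [
        {"owner": "UAV", "direction": "in"},
        {"owner": "Positioning System", "direction": "out"},
    ],
}

# Hypothetical Capella-like component exchange carrying the same flow.
exchange = {"name": "actual_pos", "source": "Positioning System", "target": "Perception"}

print(sysml_source_target(connector))
print(capella_source_target(exchange))
```

In the first case the order of the ends does not matter, because each end is classified by the direction of its port.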

Furthermore, in SysML we have the possibility to use delegated ports, that is, a port that is not a final end but an intermediary port at the border of a block that contains other blocks. In Capella, this kind of port is calculated if we decide to hide some internal parts of a component, for instance, but it does not exist as a standalone modeling element. Because we decided to use these delegated ports in our SysML model, it is easiest to obtain just the first level of the block inside the AgriUAV, instead of the final end port, when generating from Cameo Systems Modeler, while it is easier to obtain the final end when generating from the Capella model. This is why the target for the “actual_pos” is listed as UAV in the document generated from Cameo Systems Modeler, while it is listed as “Perception” in the document generated from Capella.

We could have obtained exactly the same result for both generations, either by altering the code in the Report Wizard template used by Cameo Systems Modeler or in the M2Doc template used by Capella. We could also decide to model our connection directly from end-port to end-port in SysML, without using the delegation port, to obtain the same result as with Capella. However, for this demonstration, we found it more interesting to highlight this difference, as one solution is not necessarily better than another, and it ultimately comes down to how this information will be used.

This chapter lists the direct components of the AgriUAV, with their directly allocated functions and requirements. For this chapter, there are no differences between the document generated from Capella and the one generated from Cameo Systems Modeler.

This chapter shows that we can obtain the same structured chapter for multiple similar elements. In this case, there is a header for each component (obtained dynamically), with two sub-headers, one for allocated functions and one for allocated requirements. In this example, the same code is used for both sub-chapters (applied to a collection containing both kinds of elements), but it behaves differently depending on whether the query returns a result or is empty. We also see that we are able to format the obtained information as a table, if we so choose.
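The structure of such a repeated chapter can be sketched in Python. This is only an illustration of the logic (one header per component, the same code for both sub-chapters, a different output when a query returns nothing); the text markers used for the headers, the fallback sentence and the component data are assumptions made for this sketch.

```python
def component_chapter(component):
    """Build the lines of one chapter: a header and two sub-chapters built by the same code."""
    lines = [f"== {component['name']} =="]
    sections = (("Allocated functions", "functions"), ("Allocated requirements", "requirements"))
    for title, key in sections:
        lines.append(f"-- {title} --")
        items = component[key]
        if items:
            lines.extend(f"  * {item}" for item in items)
        else:
            # Empty query result: state it explicitly instead of leaving a blank section.
            lines.append(f"  No {title.lower()}.")
    return lines


# Hypothetical components with their allocated functions and requirements.
components = [
    {"name": "Sprayer", "functions": ["Spray product"], "requirements": []},
    {"name": "Navigation", "functions": [], "requirements": ["REQ-NAV-1"]},
]

for component in components:
    print("\n".join(component_chapter(component)))
```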

When looking at the three parts of this generated document, it might seem poor in terms of information. This is true; there is a lot of information we could have chosen to include, but for a demonstration in an article, we have to limit ourselves. The goal of this article is not to show what you need to include in a specification, but rather to demonstrate the capabilities of the document generation tool and inspire you to come up with your own templates!

As explained, document generation is a key feature when using models for systems engineering. Once it is in place (automated), this transformation reduces the time and effort needed to build one or several documents and allows other teams to access key up-to-date information about the project without needing to read the model.

However, the effort required to set up the document generation must not be underestimated, as it is not negligible. There are several steps needed to reach the first generated document, the first one consisting in determining exactly what the result of the generation should be when it is not already defined from an existing template. Document generation requires formal rules (queries) to extract information from the model and the related constraints can sometimes lead to changes in the model structure or in the way we store information in the model.

While the creation of custom templates requires some work, the result is reusable across all similar projects, which will benefit future projects (if the documents are similar).

Both Cameo Systems Modeler and Capella have good capabilities for generating Word documents and make it possible to reach the same level of presentation (layout) in a Word document.

For beginners, adapting an existing template is generally easier than creating one from scratch, at least to understand how it works. For more experienced users, it is better to focus on reuse: templates can be improved to contain parameters and alternatives that allow the same template to be used in different contexts.

Document generation makes it possible to draw even more benefit from the model, thanks to the vast customization possibilities that exist.

Enjoy MBSE!

This article is part of a monthly series entitled “Advanced MBSE with SysML and other languages”.

In this ninth article, we explain how to distribute some sub-systems of a logical architecture to a set of suppliers and how to integrate and co-simulate their behavioural models in the SysML tool with the FMI standard.

This is the first article about using the FMI standard for co-simulation, but not the last! There is a lot to say about using FMI, and this ninth article can be considered as an introduction to using FMI in an extended enterprise, through a fairly simple sample case. At the end of this article, we mention some challenges and advanced topics that we plan to work on during 2021, and this work will lead to other articles.

In the previous articles (part 1 to part 8), we introduced a method based on the SysML notation to support the following systems engineering activities:

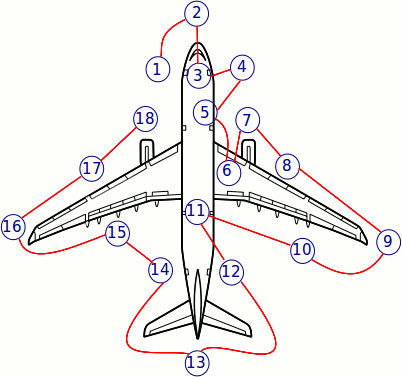

This article starts from an existing logical architecture for an Aircraft Inspection by Drone Assistant (AIDA, a model inspired by the IRT St Exupery case study). It is illustrated below:

The different subsystems (logical components) will be assigned to different suppliers. We expect each supplier to develop a behavioural model of the assigned subsystem before developing the real subsystem. The idea is to integrate all the behavioural models provided by the different suppliers into a central repository, and to co-simulate all those models (simulate all those models concurrently) to evaluate the global behaviour and validate that it behaves as expected. We will use the FMI standard for co-simulation. This is explained in the next paragraphs, before we show the practical steps for integration and co-simulation of the behavioural models through FMI.

The Functional Mock-up Interface (FMI) is a standardized interface for exchanging dynamic models produced by various simulation tools. A model is packaged into a single zipped file that combines an XML model description file, executable binary files and, optionally, C code files.

This makes it possible to extract models from several different simulation tools, to integrate heterogeneous models into a single simulation tool, and to provide a model as a “black-box” in order to protect intellectual property when required.
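
As a minimal sketch of this packaging, the following Python snippet opens an FMU as an ordinary zip archive and reads a few fields of its `modelDescription.xml`. It assumes an FMI 2.0 archive; the helper names `describe_fmu` and `build_demo_fmu`, and the content of the demo model, are hypothetical and only serve to make the example self-contained.

```python
import io
import xml.etree.ElementTree as ET
import zipfile


def describe_fmu(fmu_file):
    """Summarise the modelDescription.xml of an FMU (path or file-like object)."""
    with zipfile.ZipFile(fmu_file) as archive:
        root = ET.fromstring(archive.read("modelDescription.xml"))
    variables = [
        # In FMI 2.0, a missing causality attribute means "local".
        (var.get("name"), var.get("causality", "local"))
        for var in root.iter("ScalarVariable")
    ]
    return {
        "fmiVersion": root.get("fmiVersion"),
        "modelName": root.get("modelName"),
        "supportsCoSimulation": root.find("CoSimulation") is not None,
        "variables": variables,
    }


def build_demo_fmu():
    """Build a tiny in-memory archive with a hypothetical model description."""
    xml = (
        '<fmiModelDescription fmiVersion="2.0" modelName="Demo" guid="{demo}">'
        '<CoSimulation modelIdentifier="Demo"/>'
        "<ModelVariables>"
        '<ScalarVariable name="u" valueReference="0" causality="input"><Real start="0"/></ScalarVariable>'
        '<ScalarVariable name="y" valueReference="1" causality="output"><Real/></ScalarVariable>'
        "</ModelVariables>"
        "</fmiModelDescription>"
    )
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("modelDescription.xml", xml)
    buffer.seek(0)
    return buffer


info = describe_fmu(build_demo_fmu())
print(info["modelName"], info["variables"])
```

Listing inputs and outputs this way can be a quick check that a supplier's FMU exposes the interface that was specified, before importing it into the integration tool.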

In the next paragraph we present the model description file and the principles of FMI for both model exchange and co-simulation, with a particular focus on co-simulation.

The modelDescription file structure is presented below:

With FMI for Model Exchange, model data is extracted from a simulation tool without its solver. It is then possible to re-integrate the extracted model in another tool where an appropriate solver is available.

Note: As our article will focus on FMI for Co-Simulation, we do not go into detail on the Model-Exchange principles in this article. If you are interested in more details about Model Exchange, please refer to the FMI Standard.

With FMI for Co-Simulation, an executable simulation model is extracted from a specific tool. The executable is then used as a component library to be integrated in a wider environment. This mode is particularly useful to integrate several models coming from different suppliers to evaluate the overall consistency of the complete system.

The Master Algorithm executes the individual FMUs at regular communication time steps (hC) and propagates the outputs of each model to the models connected to it. This mechanism is driven through standardized FMI API calls, as illustrated below:
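
The loop performed by a master algorithm can be sketched in a few lines of Python. This is a simplified illustration with a fixed communication step, not the algorithm of any particular tool: the `Constant` and `Integrator` classes are hypothetical stand-ins for FMUs, and their `set_input`, `do_step` and `get_output` methods play the role that `fmi2SetReal`, `fmi2DoStep` and `fmi2GetReal` play in the FMI 2.0 C API.

```python
class Constant:
    """Toy slave with a fixed output and no input (hypothetical stand-in for an FMU)."""

    def __init__(self, value):
        self.value = value

    def set_input(self, value):
        pass  # a source has no input to set

    def do_step(self, t, h_c):
        pass  # nothing to integrate

    def get_output(self):
        return self.value


class Integrator:
    """Toy slave that integrates its input with its own internal (Euler) solver."""

    def __init__(self):
        self.state = 0.0
        self.u = 0.0

    def set_input(self, value):
        self.u = value

    def do_step(self, t, h_c):
        self.state += h_c * self.u  # the input is held constant over the step

    def get_output(self):
        return self.state


def run_master(slaves, connections, h_c, n_steps):
    """Fixed-step master: exchange data, then advance every slave by h_c."""
    for step in range(n_steps):
        t = step * h_c
        # 1. Read all outputs at the current communication point.
        outputs = {name: slave.get_output() for name, slave in slaves.items()}
        # 2. Propagate them to the connected inputs.
        for source, target in connections:
            slaves[target].set_input(outputs[source])
        # 3. Let each slave compute one communication step on its own.
        for slave in slaves.values():
            slave.do_step(t, h_c)
    return {name: slave.get_output() for name, slave in slaves.items()}


result = run_master(
    {"source": Constant(2.0), "integrator": Integrator()},
    connections=[("source", "integrator")],
    h_c=0.1,
    n_steps=10,
)
print(result)
```

Between two communication points the slaves do not exchange data, which is why the choice of the communication step size matters for accuracy.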

As explained in the introduction of the article, we want to perform early Verification and Validation of system requirements and verify the global behaviour that is split between the different subsystems. We distribute the subsystems definition models to different suppliers (specialists of different domains) and request them to provide a detailed behavioural model that is compliant with the FMI standard.

This process is illustrated in the figure below:

We collect the different models provided as executable files (“black-box”) to preserve the supplier Intellectual Property (IP). From the integrator side, we can now integrate those potentially heterogeneous models (i.e.: developed with different simulation tools and solvers) into a single tool and verify the overall execution of the different models and their interoperability. This is possible thanks to the FMI standard that ensures this interoperability.

Note: This article briefly presents the co-simulation of different logical components developed in a well-defined sequence from mature specifications. In industrial reality, it may happen that the subsystem behavioural models are developed concurrently with the system architecture definition. We do not give details about the full industrial process of exchange with suppliers and gap analysis for alignment with definition models when both definition and detailed behaviour have been defined concurrently. If you want to know more about this industrial process, you can look at our CSDM 2020 conference joint article with Renault: “Applying Model Identity Card for ADAS V&V“.

In our example we use the AIDA Unmanned Aircraft Vehicle System. The logical architecture of the UAV System is shown in the figure below:

It is possible to define verification criteria based on requirements formalized by SysML constraints, as illustrated below for controller accuracy (0.5 m):

Now we provide specifications to our suppliers (including a SysML model of the context of each subsystem and requirements on the expected behaviour). Suppliers will have to develop the behavioural models.

Note: we illustrate the full process for only two subsystems (UAV Control station and UAV system) and one external system (Air gravity) to limit the size of this article. But what is shown can be applied in the same way to many other subsystems and external systems.

This actor is in charge of developing and providing the behavioural model of the UAV Control Station, with a dedicated focus on the behaviour of the Build Flight Plan function. In this example, the UAV Control Station Model provider uses the MATLAB / Simulink 2019b tool and wants to provide the UAV Control Station behavioural model as a “black-box” executable model to the System Integrator using the FMI for Co-Simulation 2.0 export capability integrated in MATLAB / Simulink.

This actor is in charge of developing and providing the behavioural model of the UAV System, and in particular the behaviour of the UAV Control Position and attitude functions. This behavioural model is developed using the MATLAB / Simulink 2019b tool and is provided as a “black-box” executable model to the System Integrator using the FMI for Co-Simulation 2.0 export capability integrated in MATLAB / Simulink.

This actor is in charge of developing and providing the behavioural model of the UAV Environment, especially of the Air / Terrestrial Gravity. This model is required to model the effects of the external environment on the UAV System. We suppose that OpenModelica v1.16.2 is used to develop these models and to export the behavioural model using the FMI for Co-Simulation 2.0 export capability integrated in OpenModelica.

This responsibility sharing is summarised in the figure below:

Each supplier shall develop its behavioural models and define its associated interfaces:

When a supplier has finalised the Simulink behavioural model and has tested it through simulation, it is then possible to create a MATLAB/Simulink project and share its contents as an FMU for Co-simulation:

When a behavioural model has been developed and simulated successfully on the supplier side, the Modelica model can be exported as an FMU:

The supplier should configure the FMI options in the OMEdit configuration window:

Finally, the supplier can export Functional Mock-Up Unit for Co-Simulation.

When all the Functional Mock-Up Units have been received from suppliers as “black-box” executable models, the System Integrator can assemble these models. We can use the Cameo Systems Modeler tool and the Cameo Simulation Toolkit features to verify interface compatibility and the overall behaviour execution.

To perform this action, Cameo Systems Modeler (CSM) lets the user drag and drop an FMU into a SysML IBD (Internal Block Diagram) and proposes the following import menu:

When all the FMUs have been imported into the Cameo Systems Modeler project, it is possible to assemble them together and check the consistency of the interfaces with regard to the established specification:

In order to simulate this model, it was necessary to “break” the feedback loop between Air Terrestrial Gravity and UAV System. This was done by inserting a “delay” component, which introduces a discrete delay (1/z) configured to 1 communication step size. This kind of annoying effect may appear depending on the tool used for FMU integration and on the underlying co-simulation master algorithm. We propose to address this topic in more detail in a future article.
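
The effect of such a delay can be illustrated with a small Python sketch. The two components below are hypothetical toy equations with direct feedthrough, not the actual Air Terrestrial Gravity and UAV System models: without a delay, each output depends instantaneously on the other, and the loop cannot be evaluated in a simple sequence. With a unit delay (1/z) on the feedback path, one component sees the other's output from the previous communication step.

```python
class UnitDelay:
    """Discrete 1/z block: exposes the value stored one communication step earlier."""

    def __init__(self, initial=0.0):
        self.output = initial

    def update(self, new_input):
        self.output = new_input


def simulate_loop(n_steps):
    """Evaluate the loop A -> B -> (delay) -> A for n_steps communication steps."""
    delay = UnitDelay(initial=0.0)
    trace = []
    for _ in range(n_steps):
        u_a = delay.output   # A reads B's output from the previous step
        y_a = u_a + 1.0      # component A (toy equation, direct feedthrough)
        y_b = 0.5 * y_a      # component B (toy equation, direct feedthrough)
        delay.update(y_b)    # store B's output for the next step
        trace.append(y_a)
    return trace


trace = simulate_loop(40)
print(trace[:3], trace[-1])
```

In this toy case the delayed loop converges towards the solution of the original algebraic loop (y_a = 2), but only after several steps: the delay makes the model executable at the price of a transient error that depends on the communication step size.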

Next, the System Integrator is in charge of configuring the Simulation execution and especially the communication step size and the simulation duration. This configuration is done in the Simulation Configuration Diagram, as illustrated below:

Now, before starting the simulation execution, it is necessary to launch the MATLAB Console for each MATLAB/Simulink FMU (at least for MATLAB/Simulink 2019b models) and execute the shareMATLABForFMUCoSim command (where communication between MATLAB FMU and MATLAB runtime is required).

During simulation, the results can be observed from plots available in Cameo Systems Modeler, and it is possible to verify requirement compliance using co-simulation results inside Cameo:

In the execution results, we can observe that the requirement (constraint) concerning the maximum error of 0.5 m between the target position and the real position is verified for the Y coordinate (constraint is respected, in green) but not for the X coordinate (constraint is violated, in red).
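
The check behind this verdict can be sketched as follows, assuming the simulation results are available as lists of (x, y) positions sampled at the same instants. The trajectories below are made-up values chosen to reproduce the same outcome (Y within 0.5 m, X outside); they are not the results of the article.

```python
def max_abs_error(target, actual):
    """Largest absolute deviation between two equally sampled signals."""
    return max(abs(t - a) for t, a in zip(target, actual))


def verify_accuracy(target_xy, actual_xy, limit_m=0.5):
    """Return, for each axis, whether the position error stays within limit_m."""
    verdict = {}
    for index, axis in enumerate(("x", "y")):
        error = max_abs_error(
            [point[index] for point in target_xy],
            [point[index] for point in actual_xy],
        )
        verdict[axis] = error <= limit_m
    return verdict


# Hypothetical samples, for illustration only.
target = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
actual = [(0.0, 0.1), (1.7, 1.2), (2.2, 2.3)]
print(verify_accuracy(target, actual))
```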

This approach makes it possible to detect requirement violations during co-simulation execution, which would have been very hard to detect without co-simulation, or that would have been detected later in the product verification, perhaps too late…

In this example, we have illustrated the capability to execute heterogeneous FMUs (Modelica and MATLAB/Simulink) and co-simulate them within Cameo Systems Modeler. This capability supports system verification by collecting the behaviours of system elements from suppliers as Functional Mock-Up Units.

Then, the Systems Engineer can integrate these models in a co-simulation environment and define the appropriate communication time-step (hC) size for the FMUs communication. To define the appropriate time-step size, the Systems Engineer should consider the overall expected parameters, such as the overall simulation time, and signal and behaviour dynamics / periods.
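
One possible way to turn these considerations into numbers is sketched below. It relies on a rule of thumb that is our assumption, not a recommendation from the FMI standard: take a fixed number of communication points per period of the fastest signal of interest, then derive the number of steps from the simulation duration. The function name and the default of 10 samples per period are hypothetical.

```python
import math


def plan_communication_steps(fastest_period_s, duration_s, samples_per_period=10):
    """Return (h_c, n_steps) for a fixed-step co-simulation.

    Assumption: samples_per_period communication points per period of the
    fastest signal are enough to capture its dynamics.
    """
    h_c = fastest_period_s / samples_per_period
    n_steps = math.ceil(duration_s * samples_per_period / fastest_period_s)
    return h_c, n_steps


# Hypothetical figures: fastest dynamics of 0.25 s, 10 s of simulated time.
h_c, n_steps = plan_communication_steps(fastest_period_s=0.25, duration_s=10.0)
print(h_c, n_steps)
```

A smaller step improves accuracy but increases the number of data exchanges between FMUs, so the result is best treated as a starting point to be refined by comparing simulation runs.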

In this article, the initial model was created manually from the SysML model. However, it would be possible to have a seamless code generation of Modelica partial models from the SysML models. Indeed, since partial models play the role of specification (interface contracts), it would be possible to adapt the approach to take into account the full process of configuration and change management.

Next, as Cameo Systems Modeler offers FMI co-simulation capabilities, it would be possible to generate and assemble FMUs from the Modelica models produced for some subsystems with FMUs generated from Simulink models (for the control subsystems). This would make it possible to characterize the co-simulation architecture from the logical architecture.

Then, it would be interesting to explore the use of FMI companion standards such as System Structure and Parameterization (SSP), which standardizes the description of the co-simulation graph and its configuration, and the Distributed Co-Simulation Protocol (DCP), which standardizes the communication protocols for co-simulation distributed over several execution nodes such as computers.

Finally, we plan to contribute to different initiatives (like the AFIS/NAFEMS working group) that aim at bridging the gap between system definition models developed by system architects with an MBSE approach, and detailed behavioural models sometimes called “simulation models” developed by domain specialists. The idea is to leverage the integration of those different models to address different purposes including feasibility, evaluation of performances, verification of system requirements and validation of expected behaviour.

We consider the use of the FMI standard as a key practice to leverage the MBSE approach in extended enterprises. In future articles we plan to address the following complementary topics:

Enjoy MBSE!

This article is part of a monthly series entitled “Advanced MBSE with SysML and other languages“.

In the second set of articles, this series explains how to complete the top-level system definition model, formalized in SysML, with other modeling languages and tools, considered as more efficient to perform the system detailed design or certain kinds of system analysis. The focus is put on digital continuity with guidelines concerning coupling semantics and coupling automation between languages and tools.

In this article 8, we present an approach to refine the system definition into a multi-physical specialized architecture with the support of the Modelica language and associated toolbox.

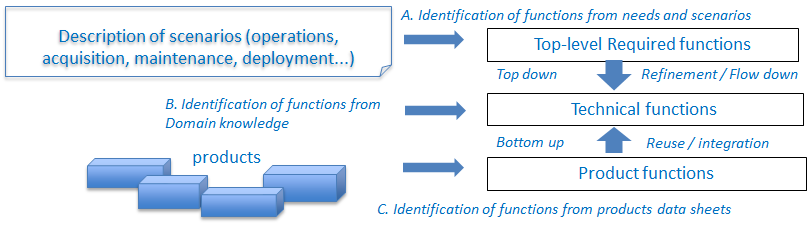

In the previous articles (part 1 to part 5), we introduced a method based on the SysML notation to support the following systems engineering activities:

SysML focuses on abstraction, requirements, functional decomposition, systems decomposition, allocation, and traceability. Within SysML, and especially in its implementation with Cameo Systems Modeler, it is possible to perform simulation and animation of state machines, activity diagrams, IBDs, or sequence diagrams, and evaluation of parametric diagrams. So, we will show that we can complete the SysML definition with Modelica concepts and use the Modelica toolbox to analyse and assess the architecture, and to refine our knowledge and the system specification.

This article starts with the availability of a UAV logical architecture for the agricultural domain. It is illustrated below:

Modelica is an object-oriented and equation-based language dedicated to the modeling and analysis of multi-physical systems. It is defined by the Modelica Association. The Modelica language relies on both graphical and textual syntax. It makes it possible to combine Differential and Algebraic Equations (DAE) with discrete event systems. This language is well suited to represent flows of energy, signals, or materials, and any continuous interactions.

With Modelica, it is possible to create architectures made of sub-models connected by ports (undirected physical flows and directed signal flows). Models can be causal or acausal, and can represent hybrid systems (discrete and continuous). Many free or commercial Modelica libraries are available for domains such as chemistry, automotive, neural network based AI, etc. The following figure shows examples of signal based components (integrator, PID, …) and physical based components (magnet, spring, tank, …) provided by SimulationX.

To illustrate the full approach, we use a simplified model of an Unmanned Aerial Vehicle (UAV) for the agricultural domain. We use the following languages and tools:

The goal is to provide a physical solution for the “Water Container Subsystem” and for the “Treatment Subsystem”. The following figures show the Modelica elements that will be used to design the physical solution of each subsystem.

First we start with the following logical architecture made by a systems engineer.

In this article, we focus on the following requirements that shall be satisfied by the final architecture. Each of these requirements specifies the valid definition domain for the variables to be observed.

Note that in some of the requirements (req 3 and 5) we have introduced the notion of derivative. This requirement specifies that when the treatment subsystem is requested to stop, the flow shall continuously decrease. This is illustrated by the example of the following figure. All the curves are valid except the red one, which has a positive derivative at some point in time.
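
On sampled simulation results, such a constraint on the derivative can be approximated by checking that the flow never rises between two consecutive samples after the stop request. The sketch below assumes the results are available as lists of times and flow values; the stop time and the two curves are made-up values for illustration.

```python
def is_non_increasing_after(times, flow, t_stop, tolerance=1e-9):
    """True if the flow never increases between consecutive samples from t_stop on."""
    samples = [q for t, q in zip(times, flow) if t >= t_stop]
    return all(later - earlier <= tolerance
               for earlier, later in zip(samples, samples[1:]))


# Hypothetical samples: the stop request is received at t = 70 s.
times = [69.0, 70.0, 70.1, 70.2, 70.3]
valid_curve = [1.0, 1.0, 0.6, 0.3, 0.0]
invalid_curve = [1.0, 1.0, 0.6, 0.7, 0.0]  # rises again at t = 70.2 s

print(is_non_increasing_after(times, valid_curve, t_stop=70.0))
print(is_non_increasing_after(times, invalid_curve, t_stop=70.0))
```

The finite difference only detects a positive derivative that is visible at the sampling rate of the results, so the output interval of the simulation should be chosen accordingly.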

The first step is to translate the functional/logical architectures defined in the SysML language toward an initial Modelica architecture in order to focus on the “Water Container Subsystem” and “Treatment Subsystem” with a language well adapted for the formalization and simulation of physical phenomena. This step results in a Modelica model containing Modelica partial models. The main advantage of using partial models to represent logical subsystems resides in their ability to be implemented using variants. Therefore, partial models can be seen as interface contracts that shall be respected by the engineers. Then, each individual model can be implemented with different architectures: this is what we will see next.

In order to perform this translation, each Subsystem (SysML Block) is converted into a Modelica partial model. Each information flow is transformed into a Modelica Signal input or output and the hydraulic flow is converted into hydraulic ports and connectors in Modelica. We have used the SysPhS library (SysML Extension for Physical Interactions and Signal Flows Simulation) to type the ports. This library is available in Cameo Systems Modeler SP4 and has been specially built to specify physical and signal flows independently of the targeted simulation platform. Also, using this extension makes it possible to generate Simulink or Modelica models directly from the SysML model.

| Systems Engineering Concept | SysML Concept | Modelica Concept |
|---|---|---|
| Function | Block | Partial Model |
| Technical System Element | Block | Partial Model |
| Data Port | Proxy Port + Interface Block (SysPhS Signal Interface) | Partial Model |
| Trigger Port | Proxy Port + Interface Block (SysPhS Signal Interface) | Signal port |
| Enable/Disable Port | Proxy Port + Interface Block (SysPhS Signal Interface) | Signal port |
| Energy Port | Proxy Port + Interface Block (SysPhS Physical Interface) | Physical Port |
| Functional Flow | Connector | Connect equation |

To design the “Liquid container subsystem” and the “Treatment subsystem” we will use the following elements from a hydraulic library available in SimulationX.

Using an available hydraulic library, we perform the following design for the “Water Container Subsystem” and the “Treatment Subsystem”. In addition, we build mock-ups for the other components in order to support simulation (this is not presented here).

The simulation results of this first design allow us to see whether the requirements are satisfied.

At t = 70 s, the stop command is received, then the volume flow (left side) and the pressure (right side) start decreasing. We see that the volume flow cannot reach 0.001 l/min in less than 0.5 s. Likewise, the pressure cannot reach 0.02 bar in less than 0.5 s.

From the simulation results, we can see that the first part of requirement 2 (“Volume Flow Stop Perf 1”) and requirement 3 (“Perform Stop Perf 1”) are not satisfied. Indeed, the spray does not stop in less than 0.5 s because of the remaining pressure in the pipes. A solution is to add valves before each nozzle that can be opened or closed on demand. The valves ensure that there is no remaining flow from the nozzles when it is not required. In addition, they ensure safety in case of failure of the controller or the pump. However, this requires the creation of a new interface between the Mission Management subsystem and the Treatment subsystem. Therefore, it would trigger a request for change to the systems engineer, who has to assess the impact of creating this new interface.
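
The stop-time criterion discussed above can be evaluated on sampled results with a short Python sketch: find the first instant after the stop command at which the signal is at or below its threshold, and compare the delay with the 0.5 s limit. The thresholds come from the text; the two sets of samples are made-up values that only mimic a slow decay and a fast cut-off.

```python
def time_to_reach(times, values, t_stop, threshold):
    """Delay after t_stop until the signal is first at or below threshold (None if never)."""
    for t, value in zip(times, values):
        if t >= t_stop and value <= threshold:
            return t - t_stop
    return None


def stops_in_time(times, values, t_stop, threshold, max_delay_s=0.5):
    """True if the threshold is reached in less than max_delay_s after t_stop."""
    delay = time_to_reach(times, values, t_stop, threshold)
    return delay is not None and delay < max_delay_s


# Hypothetical volume flow samples in l/min, stop command at t = 70 s.
times = [70.0, 70.2, 70.4, 70.6, 70.8, 71.0]
slow_decay = [1.2, 0.8, 0.5, 0.3, 0.1, 0.0005]
fast_cut_off = [1.2, 0.0005, 0.0, 0.0, 0.0, 0.0]

print(stops_in_time(times, slow_decay, t_stop=70.0, threshold=0.001))
print(stops_in_time(times, fast_cut_off, t_stop=70.0, threshold=0.001))
```

The same function applies to the pressure signal with a threshold of 0.02 bar.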

A new interface is created as seen in the following image (valve_cmd).

The design in Modelica results in the following Treatment Subsystem model:

The following results show that the requirements 2 and 3 are now satisfied by the design.

The physical interfaces of the subsystems can be generated from the Modelica model. Here is an excerpt of the physical architecture that corresponds to a specific Modelica design. Note that we may find many other types of interfaces (electrical, mechanical, …). In that case, we suggest creating one IBD per physical viewpoint (physical domain). Now the treatment subsystem is « completely » specified (electrical and mechanical viewpoints are missing). We have identified a design solution that can satisfy the requirements. Hence, physical interfaces and sub-components can be built in SysML and traced to the rest of the model.

In this article we have proposed a coupling method between SysML (a Systems Engineering language) and Modelica (a physical modeling language). The proposed method includes a definition of system requirements and logical architecture using SysML and an initialisation of a partial Modelica model for physical architecting. Finally, when the virtual product can be verified against its requirements, this activity can lead to a change management loop with some updates to perform in the system definition (system requirements) with potential impacts on the SysML model.

First, the initial model has been created manually from the SysML model. However, it would be possible to have a seamless code generation of Modelica partial models from the SysML models. Indeed, since partial models play the role of specification (interface contracts), it would be possible to adapt the approach to take into account the full process of configuration and change management.

Second, as Cameo Systems Modeler offers FMI co-simulation capabilities, it would be possible to generate and assemble FMUs from the Modelica models produced for some subsystems with FMUs generated from Simulink models (for the control subsystems). This would make it possible to characterize the co-simulation architecture from the logical architecture.

Enjoy MBSE!

This article is part of a monthly series entitled “Advanced MBSE with SysML and other languages“.

In the second set of articles, this series explains how to complete the top-level system definition model, formalized in SysML, with other modeling languages and tools, considered as more efficient to perform the system detailed design or certain kinds of system analysis. Focus is put on digital continuity with guidelines concerning coupling semantics and coupling automation between languages and tools.

In this article 7, we start from a System Definition model developed with SysML and we present an approach that uses a specialised real-time architecture language to refine this definition into an Electric / Electronics and Software Architecture. We use AADL (Architecture Analysis and Design Language) as an example, but we could use languages with similar concepts and purposes such as AUTOSAR (used in the automotive industry) or UML MARTE.

We present the allocation of timing budgets on SysML models, starting from operational scenarios, and through the concept of Functional Chains. We refine these timing requirements through the definition of physical components and a physical architecture formalised with AADL.

In the previous articles (part 1 to part 5), we have introduced a method based on the SysML notation to support the following systems engineering activities:

This article starts with the availability of a logical architecture for a case study called AIDA (that comes from the Saint Exupery Research Institute). It is illustrated below:

End to end timing requirements are very important non-functional requirements (amongst others) to consider in order to reduce the solution space and to choose the appropriate physical solution. An end to end timing requirement generally specifies the acceptable maximum timing duration expected from an input to a specific output (of a function or a system) following a specific flow path in a specific operational scenario. End to end timing requirements may be imposed by a Stakeholder Requirement or may emerge as a System Requirement to satisfy a Stakeholder Requirement.

Functional chains play a key role in specifying end to end timing requirements. A functional chain may be seen as an abstraction of a set of execution paths from an SoI’s input to an SoI’s output. In this article, we propose to formalise a functional chain with a SysML block, and the end-to-end timing requirement with a time duration constraint element directly available in the SysML notation, as illustrated below:

Then, we propose to show the realisation of the functional chain with a SysML IBD. This diagram makes it possible to visualise the end-to-end flow from the selected function/System input(s) to the selected function/System outputs. The inputs and outputs of each function that participate in the functional chain are linked with a dependency link.

As an example, the following IBD diagram focuses on the functional chain that controls the position of the UAV and the required thrust value. The end to end timing requirement applies to the whole functional chain, which means in our example that the final solution shall take less than (or equal to) 10 ms to perform the loop. Therefore, it is necessary to divide the timing budget and to allocate a time duration to each function in order to find a solution that can satisfy this requirement.

In practice, the proposed process requires the System Engineer to define timing requirements as budgets for all the elements of the overall chain. Then, Systems Designers have to demonstrate how their selected design meets these execution budgets. This is where we suggest using the AADL language to refine the timing properties induced by the selected hardware components and software properties. The next paragraph quickly presents the AADL language and the following paragraph provides an application on our example.
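
The budget allocation itself is simple arithmetic, sketched below: the budgets allocated to the functions of a chain are summed and compared with the end to end requirement (10 ms in our example). The function names and the individual budgets are hypothetical values chosen for illustration.

```python
def check_budget(allocations_ms, end_to_end_ms):
    """Return (total, margin); a negative margin means the chain is over budget."""
    total = sum(allocations_ms.values())
    return total, end_to_end_ms - total


# Hypothetical split of the 10 ms end to end budget over a functional chain.
allocations = {
    "Control Position": 3,
    "Control Attitude": 3,
    "Compute Thrust": 2,
    "Generate Thrust": 1,
}
total, margin = check_budget(allocations, end_to_end_ms=10)
print(f"allocated {total} ms, margin {margin} ms")
```

Keeping an explicit margin leaves room for communication delays between components, which only become known once the physical architecture is defined.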

AADL (Architecture Analysis and Design Language) is a language dedicated to the modeling and analysis of real-time, safety critical, embedded systems. It is a standard published by the Society of Automotive Engineers as reference AS5506C.

The AADL language relies on both graphical and textual syntax and it includes the following concepts and extensions:

In this article, we focus on the following Base Standard concepts:

pictures from AS5506C standard


To illustrate the full approach, we use a simplified model of an Unmanned Aerial Vehicle (UAV) based on the AIDA case study developed at the St-Exupery Research Institute. We use the following languages and tools:

The hardware architecture has been defined with inspiration from the Crazyflie AADL architectural model.

The AIDA Logical Architecture in SysML has been recalled at the beginning of this article. Here we put the focus on the UAV system and in particular the co-design of the electric/electronic and distributed software architecture.

The functional goal is to control the actual position of the UAV to fit the expected trajectory around the aircraft. Therefore, one must find the right control parameters so that the UAV can follow the expected trajectory within an expected maximum timing latency. Then, we want to verify the compliance of the selected hardware and software architecture to the timing requirements.

The AIDA Functional Architecture is defined in SysML and presented below:

In this functional architecture, we detail the position and attitude control functions, as well as the compute thrust and generate thrust functions of the UAV.

The logical architecture is established with regard to the emerging decomposition into systems and sub-systems. In this example, we decompose the UAV into the following subsystems:

Then, we allocate the functions to the subsystems as follows:

Next, we define the expected maximum latency of the measurement to thrust force control (performance of the control loop) in a specific functional chain defined in SysML, as presented below:

This chain is extended with the duration constraint property, defined as a range of values between a minimum and a maximum. In our example: 0 ms .. 10 ms.

From this step, we perform the design of this system using the AADL language to support software design by taking into account hardware constraints and performing timing verification.

The first step is to translate the functional/logical architectures and functional chains defined in the SysML language toward a logical architecture using the AADL language. This step results in an AADL System Implementation using Abstract Components and end to end flows as illustrated below:

In order to perform this translation, we convert each SysML flow of information of type “Trigger” into an AADL event exchange and each flow of information of type “Data” into an AADL data exchange. The functional chain is converted into the equivalent AADL concept, an end to end flow with a latency specification.

| System Engineering Concept | SysML Concept | AADL Concept |
|---|---|---|
| Function | Block | Abstract Component |
| Technical System Element | Block | Abstract Component |
| Trigger Port | Port | Event Port |
| Enable/Disable Port | Port | Event Port |
| Any other Port (Data, Material or Energy) | Port | Data Port |
| Internal Dependency Relation | Dependency | Flow path |
| End to End Functional Chain from operational scenario | Block, IBD | End to end flow |
| Functional Chain Duration | Time Duration Constraint | Latency |
| Functional Flow | Connector | Port Connection Feature |
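
The port rules of this table lend themselves to automation. As a minimal sketch, the function below applies them to a list of ports: trigger and enable/disable ports become AADL event ports, and any other port becomes a data port. The port names in the example are hypothetical, and a real translator would of course work on the SysML model rather than on a dictionary.

```python
EVENT_PORT_KINDS = {"Trigger", "Enable/Disable"}


def aadl_port_kind(se_port_kind):
    """Map a systems engineering port kind to the AADL port concept of the table."""
    return "Event Port" if se_port_kind in EVENT_PORT_KINDS else "Data Port"


def translate_ports(ports):
    """ports maps a port name to its systems engineering kind."""
    return {name: aadl_port_kind(kind) for name, kind in ports.items()}


# Hypothetical ports of a logical component.
ports = {"start_mission": "Trigger", "target_position": "Data", "power_in": "Energy"}
print(translate_ports(ports))
```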

Once we have created a logical architecture in AADL that is semantically equivalent to the SysML logical architecture, we can start exploring the physical architecture definition using AADL concepts.

We initiate the Physical Architecture from the Logical Architecture and start to design Physical Components (defined as System Components in AADL), one for each Subsystem:

Now we can explore the different physical technical solutions able to refine this logical architecture and we determine the appropriate physical components (hardware and software) which satisfy all the requirements (functional and non-functional such as timing requirements or expected temperature range, safety level of selected devices, constraints due to selected solutions, …).

This exploration space is wide and shall be done carefully with regards to the compatibility of the physical interfaces. In the following example, we select a design solution to minimise the cost and timing execution of the Flight Control Management from Targeted Attitude and Position input up to the generation of the Thrust Force. The engineering goal is to move the UAV with an expected maximum timing budget of 10 ms.

With this design, we propose a mapping from Sub-Systems to Physical Components as follows:

Note: In the Logical Architecture, the Perception Sub-System shall perform the following internal functions:

According to the expected performance and available/selected technologies, some sensors may provide hardware support for FuseData. In the proposed solution we perform the selection based on costs, so we decide to perform FuseData using dedicated software and we allocate the FuseData function to the Flight Controller instead of Sensors module.

Then, we can design the software architecture while taking into account hardware constraints, and study the technical solutions to implement the logical components (selection of appropriate devices and processors, decompose functions into several processes, allocate processes to one or more processors, define interaction between the software and hardware elements, …). At this stage, hardware and software engineers shall analyse the suitability of the architecture regarding functional and non-functional requirements (such as timing requirements).

For this article, we propose a simplified electronic architecture using the following physical components:

With those choices, we suggest the following AIDA HW Architecture:

AIDA Hardware Architecture in STOOD for AADL

For software design, we suggest decomposing the software in 2 processes (connectivity and flight management). This is mainly due to the following elements:

That is the reason why, in this proposal, we have performed the following decomposition:

The proposed AIDA SW Architecture is presented below:

AIDA SW Breakdown Structure

AIDA SW Architecture in Ellidiss STOOD for AADL

Finally, we can perform the binding in a 3-step process:

1. Allocate Software Processes to Hardware Processors

2. Perform correspondence/allocation between Abstract components (translated from SysML logical components) and Hw/Sw components.

3. Verify the consistency of the design by checking that all the logical components are bound to the Processes and the hardware components.
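
The consistency check of step 3 can be sketched as a simple traversal of the two bindings: every logical component must be bound to a software process, and every such process must be hosted by a hardware component. The process names follow the two processes proposed above; the component and processor names are hypothetical.

```python
def unbound_components(logical_components, component_to_process, process_to_processor):
    """List the logical components whose binding chain is incomplete, with the reason."""
    problems = []
    for component in logical_components:
        process = component_to_process.get(component)
        if process is None:
            problems.append((component, "no software process"))
        elif process not in process_to_processor:
            problems.append((component, f"process '{process}' has no processor"))
    return problems


# Hypothetical bindings, for illustration only.
logical_components = ["FuseData", "ControlPosition", "SendTelemetry"]
component_to_process = {
    "FuseData": "flight_management",
    "ControlPosition": "flight_management",
}
process_to_processor = {
    "flight_management": "flight_controller_cpu",
    "connectivity": "flight_controller_cpu",
}
print(unbound_components(logical_components, component_to_process, process_to_processor))
```

An empty result means that all the logical components translated from SysML are covered by the hardware/software design.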

AIDA UAV Hw/Sw Architecture

Now, it is possible to use integrated AADL analysis (static analysis, scheduling analysis) thanks to AADL-compatible simulation tools such as AADL Inspector.

We can analyze compliance with the expected end-to-end timing requirements:

Then we perform a scheduling analysis with an appropriate simulator to check the suitability of the technical solution parameters. Indeed, defining appropriate scheduling properties may be difficult because hardware elements have physical limits (sensor sampling time, …) and software timing properties (priorities, worst-case execution time, data transmission delays, periods, …) are not obvious to determine. Moreover, the allocated timing budget may prove infeasible if the Systems Engineer did not have these constraints in mind when defining it.

In this example of scheduling simulation, we can observe that some deadlines are missed, which was not obvious to determine in the initial allocation phase.
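To give an intuition of why missed deadlines are hard to anticipate, here is a minimal sketch of a classical response-time analysis. It is not the algorithm of any particular AADL tool, and it rests on simplifying assumptions that are ours: a single processor, fixed-priority preemptive scheduling, rate-monotonic priorities, independent periodic tasks, and deadlines equal to periods. The task names and timing values are hypothetical.

```python
def ceil_div(a, b):
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def response_times(tasks):
    """Worst-case response time of each task under the stated assumptions.

    tasks: list of (name, period, wcet) in integer time units (e.g. ms).
    Returns {name: (response_time, deadline_met)}; when the deadline is
    missed, response_time is the first iterate that exceeded the period.
    """
    ordered = sorted(tasks, key=lambda t: t[1])  # shorter period = higher priority
    results = {}
    for index, (name, period, wcet) in enumerate(ordered):
        higher = ordered[:index]
        response = wcet
        while True:
            # Preemption time caused by all higher-priority tasks.
            interference = sum(ceil_div(response, p) * c for _, p, c in higher)
            updated = wcet + interference
            if updated == response or updated > period:
                response = updated
                break
            response = updated
        results[name] = (response, response <= period)
    return results


# Hypothetical task set: total utilisation is about 98 %, below 100 %.
tasks = [("acquire", 10, 4), ("fuse", 15, 5), ("control", 20, 5)]
for name, (response, ok) in response_times(tasks).items():
    print(name, response, "ok" if ok else "deadline missed")
```

With these illustrative values the lowest-priority task misses its deadline although the processor is not overloaded on average, which is why such an analysis is worth running before freezing the timing budgets.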

The Systems Architect has to analyse the impacts of change requests from the proposed hardware/software solutions.

In this example, the systems engineer has performed an initial allocation of functions to logical components based on his initial knowledge. Then, the Hardware/Software Design team has analysed the existing components, devices and software components available as libraries. In our case, the analysis has shown a mismatch between the initially expected interfaces and the interfaces provided by the selected sensor device (which implements the Perception Subsystem). So, the Hardware/Software Design team proposes to change the initial allocation of the FuseData function from the Perception Subsystem to the Flight Control Management subsystem.

So we update the Logical Architecture in SysML accordingly, as shown in the following figure:

In addition, some timing budgets between subsystems can be adjusted, when possible, according to the feasibility of the proposed solutions. Some changes in timing requirements or in interface definitions may have consequences on other engineering specialities or on other components. So, this impact analysis is not always straightforward (it is rather iterative).

In this article we have proposed a coupling methodology between SysML (a Systems Engineering language) and a hardware/software design language (AADL). Note that this method can easily be tuned for other languages with similar concepts (real-time, scheduling, …) such as AUTOSAR or UML MARTE.

The proposed method includes a definition of system requirements using SysML (including end-to-end timing requirements with the Functional Chains concept) and the initialisation of a specialised model for hardware/software architectures (and associated network topologies). This translation makes it possible to study the detailed design in the AADL language in order to benefit from suitable concepts and the various timing analysis capabilities available in AADL toolboxes.

Finally, when the virtual product can be verified against its requirements, this activity can lead to a change management loop with some updates to perform in the system definition (system requirements) with potential impacts on the SysML model.

To complete the present work, we will later refine the analysis of the mapping between the Data Types defined at System Level and the Data Types resulting from the selected design. In particular, we will study the influence of the selected implementation types (for example, how to implement a system-level Real value as 32-bit fixed-point data) and verify the suitability of the selected implementation type with regard to accuracy requirements.
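To make the data type question concrete, the following minimal sketch encodes a real value as signed 32-bit fixed point and checks a candidate format against a range and an accuracy requirement. The helper names, the choice of round-to-nearest and the numeric values are assumptions made for this illustration, not results from the case study.

```python
INT32_MIN = -(1 << 31)
INT32_MAX = (1 << 31) - 1


def to_fixed(value, frac_bits):
    """Encode a real value as a signed 32-bit integer with frac_bits fractional bits."""
    raw = round(value * (1 << frac_bits))  # round to nearest representable step
    if not INT32_MIN <= raw <= INT32_MAX:
        raise OverflowError(f"{value} does not fit with {frac_bits} fractional bits")
    return raw


def from_fixed(raw, frac_bits):
    """Decode the fixed-point integer back to a real value."""
    return raw / (1 << frac_bits)


def meets_accuracy(frac_bits, value_min, value_max, accuracy):
    """True if the range fits in 32 bits and half a step is within the accuracy."""
    scale = 1 << frac_bits
    fits = value_min * scale >= INT32_MIN and value_max * scale <= INT32_MAX
    worst_case_error = 0.5 / scale  # rounding error is at most half a step
    return fits and worst_case_error <= accuracy


# Hypothetical requirement: values within +/-1000 with an accuracy of 1e-4.
print(meets_accuracy(16, -1000.0, 1000.0, 1e-4))
print(from_fixed(to_fixed(3.14159, 16), 16))
```

The same check shows the trade-off the system engineer has to arbitrate: adding fractional bits improves accuracy but reduces the representable range, and the reverse.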

In further work, we plan to investigate how to create an initial physical architecture in the SysML model by defining component libraries (ECUs, sensors, mechanical components, …) and network topologies, and how to convert this into the AADL “world”. Then, we will propose adequate automations to perform the “bridge” from SysML to AADL based on mappings (still to be refined).

We also intend to explore in more detail the other AADL language capabilities and associated annexes such as the behavioural annex (how to initiate AADL modes management from SysML behavioural description) or Error Modelling Annex (how to coordinate Systems Safety Analyses, Systems Engineering model and Safety Analyses for hardware/software design in AADL).

Enjoy MBSE !

We are warmly grateful for the support of the Ellidiss company and in particular Pierre Dissaux concerning the AADL modeling and simulation activity in the context of this article.

A special thanks to Jerôme Hugues for his advice and interesting discussions about these topics.

This article is part of a monthly series entitled “Advanced MBSE with SysML and other languages“.

In the second set of articles, this series explains how to complete the top-level system definition model, formalized in SysML, with other modeling languages and tools, considered as more efficient to perform the system detailed design or certain kinds of system analysis. Focus is put on digital continuity with guidelines concerning coupling semantics and coupling automation between languages and tools.

In this article 6, we start from a System Definition model developed with SysML and we present an approach, which uses Simulink to define or refine part of the system’s behaviour such as the control loop of the system in its environment. We discuss 2 different ways of using Simulink.

In the previous articles (part 1 to part 5), we introduced a method using the SysML notation to support the following systems engineering activities :

This article starts with the availability of a logical architecture for a case study called AIDA (coming from the Saint Exupery Research Institute). It is illustrated below:

Once a logical architecture has been defined, Systems Engineers start to communicate it to the various specialists involved in the system detailed definition (software engineers, mechanical engineers, command-control engineers, hardware engineers, …). These specialists will have to analyze the system requirements (including interfaces definition, expected behavior and associated performance) and will verify the requirements feasibility (is there a solution that can satisfy all of these requirements?).

In this article we focus on the Control Engineer. This specialist applies Control theory to one or several components of the system architecture and to its environment. He needs to define equations, reuse operators and generic components from libraries and toolboxes, use solvers and timed simulation, access optimization tools, use matrix-based computations, etc. All these features are offered by math-based simulation tools. Among these tools, we choose to restrict our focus to the usage of the MathWorks MATLAB/Simulink/System Composer suite as it is the most commonly used in the industry today, as far as we know.

In the next paragraph we detail a process to refine the definition of internal control. It contains the following steps:

In this first approach, the Logical Architecture of the SysML model is translated into a Simulink model while preserving the allocation of system functions to logical components (subsystems). Simulink component libraries are used to refine the functional behavior. Then, the time-based simulation is used to verify that the interfaces between system functions and between subsystems are consistent, and that there exists a solution that can satisfy the system functional and performance requirements. The interface definitions can be confirmed or refined by the control engineer.

In the second approach, we still transition to Simulink but with 2 different steps and usages of Simulink. First we use System Composer (Simulink facet) to characterize and assess the architecture, thanks to features like multi-views, filtered view and analysis. Second, we use the “more traditional” Simulink component libraries to refine the function’s behavior.

The expected benefit of using the System Composer facet is a better separation of concerns: in the first step, the logical architecture can be characterized with the support of stereotypes on ports or on connectors, and assessed with the support of analysis features like “dynamic consistency checks of interfaces”. In the second step, the different components and their allocated functions can be refined, especially for the behavior, with the support of a wide diversity of generic component libraries, patterns and other useful features.

Note: each step has its own interest and may be performed by different users with different experiences.

To illustrate and give elements of comparison between these 2 scenarios, we use a simplified model of control for the trajectory of an Unmanned Aerial Vehicle (UAV) based on the AIDA case study developed at the St-Exupery Research Institute. The AIDA Logical Architecture in SysML has been recalled in the context at the beginning of this article. Here we put the focus on the control loop between the perception subsystem, the Flight Management Subsystem and the Thrust Management Subsystem.

The goal is to control the actual position of the UAV to fit the expected trajectory around the aircraft. Therefore, one must find the right control parameters so that the UAV can follow the expected trajectory within a minimum error margin (that shall be defined in the performance requirements).

First, we define the scope of the transformation between the SysML logical architecture and the Simulink model, because we do not need to translate the full SysML model. We restrict the scope of this transformation to the functions and components that are useful for the UAV trajectory control loop (including the Air / Terrestrial Gravity model, to reflect the physical environment's effect on the control loop).

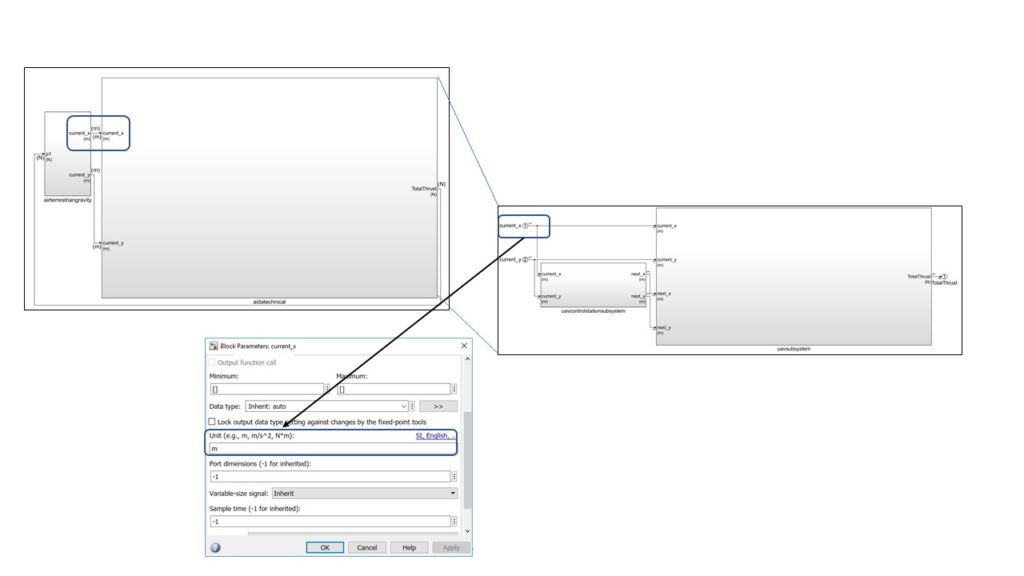

Note: in this SysML model, ports have been split (for instance current x and current y which rely on a Current Position flow) in order to be able to use the automations available in the tool.

Concerning the specification of the interface types and units, the SysML tool (Cameo Systems Modeler) provides access to the ISO 80000 Standard Units :

The SysML logical architecture can be translated to the MathWorks Simulink modeling environment through two different methods:

With this method, it is possible to create a logical architecture semantically equivalent to the SysML model (same components and interfaces) as illustrated below:

One of the main benefits of this approach is the preservation of the stereotypes initially defined in the SysML model. For example, in the Functional Architecture model of our case study, we have defined the following stereotypes on function inputs and outputs: Information, Energy, Material.
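As a tool-neutral illustration of what such stereotypes add, the sketch below tags ports with one of the three flow kinds and then filters them, which is the kind of view an architecture review relies on. It is plain Python, not the System Composer or Cameo API; the class names, field names and port names are assumptions made for this illustration.

```python
from dataclasses import dataclass
from enum import Enum


class FlowKind(Enum):
    """The three stereotypes used on function inputs and outputs."""
    INFORMATION = "Information"
    ENERGY = "Energy"
    MATERIAL = "Material"


@dataclass(frozen=True)
class Port:
    owner: str       # component or function owning the port (hypothetical names)
    name: str
    direction: str   # "in" or "out"
    kind: FlowKind   # stereotype applied to the port


def filter_view(ports, kind):
    """Keep only the ports carrying the given stereotype."""
    return [port for port in ports if port.kind is kind]


# Illustrative ports only.
ports = [
    Port("Perception", "current_x", "out", FlowKind.INFORMATION),
    Port("Perception", "current_y", "out", FlowKind.INFORMATION),
    Port("Thrust Management", "electrical_power", "in", FlowKind.ENERGY),
]

for port in filter_view(ports, FlowKind.INFORMATION):
    print(port.owner, port.name, port.direction)
```

If the `kind` attribute is dropped during a model transformation, this filtering is no longer possible, which is what losing the stereotypes means in practice.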

Within System Composer, it is possible to define the same stereotypes and apply them to the System Architecture (functions or component interfaces) :

System Composer also offers a feature to filter different views of the same system architecture, which is very useful to ease the architecture reviews:

With this method, we can use some automations available out of the box in Cameo Systems Modeler (the SysML tool we have used) to create the Simulink Model skeleton from the SysML filtered model (model filtered with the UAV trajectory scope).

If data types and units have been specified in SysML, the automated transition propagates the data types and units in the Simulink model:

But without System Composer, the additional semantics defined in the SysML model through the stereotypes are lost.

In both cases (with or without System Composer), the resulting model contributes to the specification for the control engineer.

Next, we complete the behavior of the functions with existing assets/knowledge in control, such as PID controllers, and we refine the associated parameter values with the support of the simulation.

Once the simulation seems to satisfy the requirements expressed at the logical level, it is possible to derive new lower-level requirements. For instance, it may be possible to add requirements on stability, or on expected control accuracy. The PID parameters can be finalized only in a physically realistic environment. However, the simulation gives an idea of the feasibility and of the range of values to be implemented later.

The Systems Architect has to analyse the impacts of change requests from the control engineer. Some changes in requirements or in interface definitions may have consequences on other engineering specialties or on other components. So, this impact analysis is not always straightforward.
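The idea of tuning PID parameters through simulation can be sketched outside Simulink with a few lines of Python. This is a deliberately simplified illustration under our own assumptions: the plant is a unit point mass on a single axis (not the AIDA dynamics), integration uses a fixed time step, and the gains are hypothetical values chosen for a well-damped response.

```python
def simulate_pid(kp, ki, kd, target=10.0, disturbance=0.0, dt=0.01, duration=20.0):
    """Simulate a unit point mass on one axis driven by a PID controller.

    disturbance is a constant external force (e.g. wind), hypothetical.
    Returns the list of position errors, one per time step.
    """
    position, velocity = 0.0, 0.0
    integral = 0.0
    previous_error = target - position
    errors = []
    for _ in range(int(round(duration / dt))):
        error = target - position
        integral += error * dt
        derivative = (error - previous_error) / dt
        command = kp * error + ki * integral + kd * derivative
        # Semi-implicit Euler step: unit mass, so acceleration equals force.
        velocity += (command + disturbance) * dt
        position += velocity * dt
        previous_error = error
        errors.append(error)
    return errors


# Hypothetical gains; compare PD and PID under a constant disturbance.
pd_errors = simulate_pid(11.0, 0.0, 6.0, disturbance=-2.0)
pid_errors = simulate_pid(11.0, 6.0, 6.0, disturbance=-2.0)
print("final error without integral term:", pd_errors[-1])
print("final error with integral term:   ", pid_errors[-1])
```

Comparing the two runs shows the kind of insight the simulation provides early: without the integral term a constant disturbance leaves a residual position error, which can then be turned into a lower-level accuracy requirement.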

This discussion is based on the use of the following tools and configurations:

If your MBSE method is still in the definition stage, and if there is a need to go from a SysML logical architecture to Simulink in order to benefit from mathematical-based simulation tools, it is clear to us that System Composer is the right target from SysML. System Composer is the MathWorks tool that can preserve the SysML stereotypes put on the logical architecture (components and interfaces) and thus provides good support for architecture views and analysis in the Simulink environment. As soon as some automation is available to support this transition between SysML and System Composer, together with features to statically check the consistency of interfaces, we strongly recommend this way of transitioning from SysML to Simulink.

Otherwise, if you need to go from SysML to Simulink today, with the capabilities provided by Cameo Systems Modeler V19SP4 and MATLAB/Simulink 2020a, it is probably more efficient to use the “traditional” (direct) transition from SysML to Simulink, thanks to the automations that exist to support part of this transition.

In this article we have discussed the transition from a Logical Architecture formalized in SysML, to a Simulink model limited to the structure (components, their allocated functions, and their associated interfaces). Note that export from Cameo Systems Modeler to Simulink (with direct transition) also supports the translation of behavioral elements such as constraint blocks and state machines. Those aspects will be detailed in a future article.

Concerning multi-physical aspects, we plan to explore the usage of the SysPhS standard, which is available in Cameo Systems Modeler through the SysPhSLibrary, to support the automated transition of physical elements (structure and behavior) to MathWorks tools.

Additionally, we plan to explore in further detail the change analysis process that is performed when there are updates of the System Logical Architecture in the SysML model, and the possible consequences on the Simulink model. We will focus on the method but also on the tools able to support the difference/merge between SysML and Simulink models.